📊 Project Overview

This project demonstrates the usage of Bloom Filters for efficient spell-checking. A Bloom Filter is a space-efficient probabilistic data structure used to test whether an element is a member of a set. It is faster and requires much less space compared to other data structures like lists and sets. However, it comes with the trade-off of possible false positives.

In this project, we compare the performance of a Bloom Filter with a traditional list and set for spell-checking tasks using a large dictionary of words.

📁 Dataset

NLTK Words Dataset: A list of English dictionary words is used as the dataset. This dataset contains 236,736 words, and each word is added to the Bloom Filter for membership testing. Movie Reviews Dataset: The NLTK corpus movie_reviews is used to create a bag of words for positive and negative movie reviews. This data helps simulate real-world scenarios where a spell-checker is needed.

🚀 Key Features

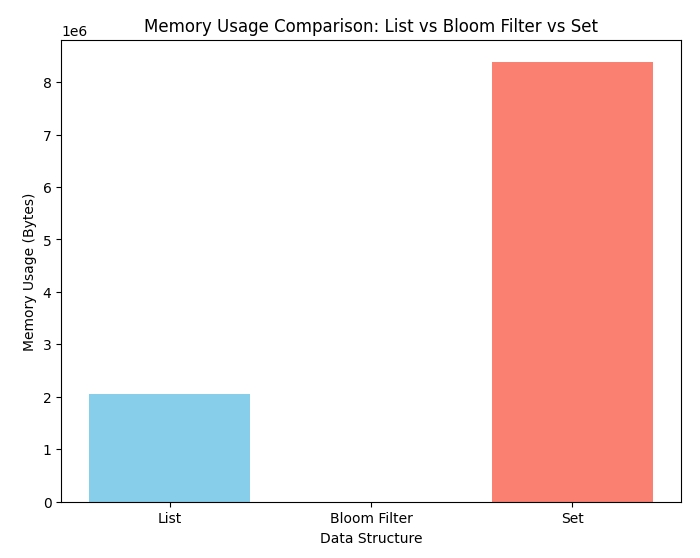

- Memory Efficiency: Bloom filters use significantly less memory compared to Python sets and lists, making them ideal for memory-constrained applications.

- Performance Comparison: Benchmarking between Bloom Filter, list, and set for checking if a word exists in the dictionary.

- Time Complexity: Showcases the time efficiency of Bloom Filters for membership tests.

📋 Steps to Run the Project

!pip install bloom_filter #Install the bloom_filter Library: Ensure you have the required package installed.

import nltk #Download NLTK Data: Download necessary datasets from the NLTK corpus.

Using the timeit command, we compare the time taken to check for the word “California” in a list, Bloom Filter, and set:

- List: 555 µs (average)

- Bloom Filter: 16.1 µs (average)

- Set: 54.9 ns (average) While a Python set is the fastest for exact membership testing, the Bloom Filter offers a great trade-off between memory usage and performance, especially in large-scale applications.

Creating and Using a Bloom Filter

from bloom_filter import BloomFilter

# Create a Bloom Filter

word_filter = BloomFilter(max_elements=236736)

# Add words to the filter

for word in word_list:

word_filter.add(word)

Memory Usage

from sys import getsizeof

# Check the memory usage of different structures

print(f'Size of word_list: {getsizeof(word_list)} bytes')

print(f'Size of word_filter: {getsizeof(word_filter)} bytes')

print(f'Size of word_set: {getsizeof(word_set)} bytes')

Performance Comparison Code

# Time comparison between list, Bloom Filter, and set for membership testing

%timeit "California" in word_list

%timeit "California" in word_filter

%timeit "California" in word_set

Spell Check Python Code

def spell_check(review_words, dictionary):

count = 0

for word in review_words:

if word not in dictionary:

count += 1

return count / len(review_words)